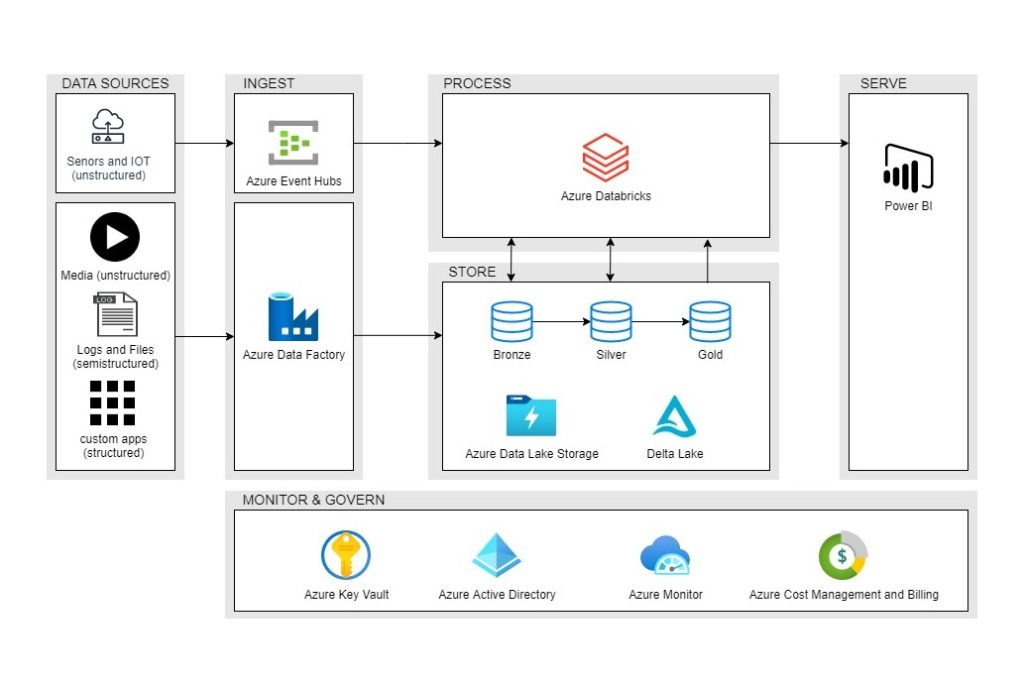

With Azure Databricks gaining popularity in the data community, it is worth looking at how Azure Databricks and Delta Lake integrate with other Azure services, such as Azure Data Factory and Azure Data Lake, to support a modern, scalable, flexible and cost-effective data and analytics architecture.

1. Data that needs to be extracted can be arriving in two ways:

2. Raw streaming data is ingested to Azure Databricks using Azure Event Hubs.

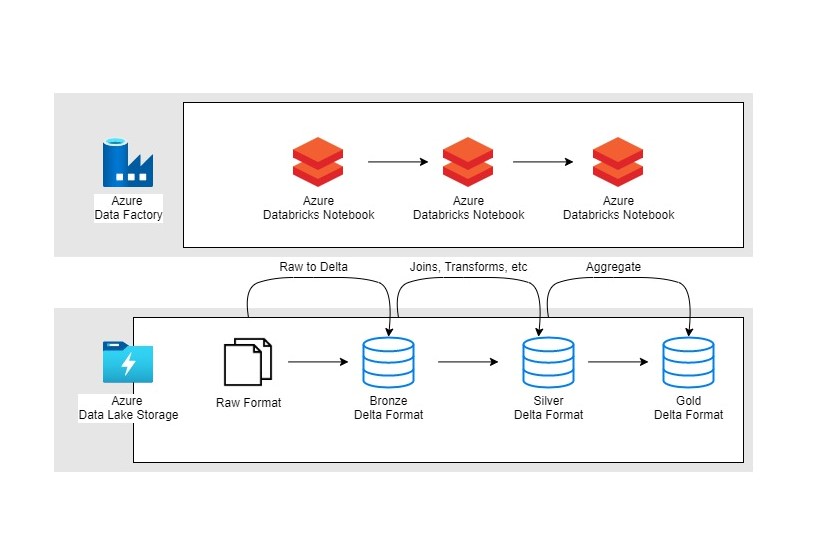

3. Raw batch data is ingested to Azure Data Lake using Azure Data Factory (ADF) pipelines. These data loads can be automated and scheduled using ADF pipelines.

4. The ADF pipelines are also used to schedule and trigger Azure Databricks notebooks. Hence, once the data lands in Azure Data Lake (ADL), Azure Databricks notebook:

5. The aggregated data is then delivered to Analytical tools like PowerBI for analysis purposes and further, generating dashboards and reports.

6. During this whole process, Azure Data Monitor and Governance services can be leveraged to meet the following purposes:

Organisations in the following industries can benefit from the above solution:

Azure Databricks integrates well with the other tools in the Azure data ecosystem. It is a flexible solution for any use case that involves analysing large volumes of data arriving from several sources in different formats, and combining that data for analysis.
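To make the idea of combining differently formatted sources more concrete, here is a minimal Python sketch. It is a toy illustration only: it uses the Python standard library rather than Spark, Delta Lake or any Azure SDK, and the sample records, the field names (`store`, `amount`) and the function names are all hypothetical. In Azure Databricks the same steps would typically be written against Spark DataFrames in a notebook.

```python
import csv
import io
import json
from collections import defaultdict

# Hypothetical sample inputs; the field names are illustrative only.
STREAM_EVENTS = [
    '{"store": "A", "amount": 10.0}',
    '{"store": "B", "amount": 5.5}',
]
BATCH_CSV = "store,amount\nA,20.0\nB,4.5\nC,7.0\n"


def parse_stream(lines):
    """Parse JSON-encoded events (a stand-in for the streaming feed)."""
    return [json.loads(line) for line in lines]


def parse_batch(text):
    """Parse a CSV extract (a stand-in for a batch file landed in the lake)."""
    reader = csv.DictReader(io.StringIO(text))
    # CSV values arrive as strings, so convert amounts to floats
    # to match the shape of the streaming records.
    return [{"store": row["store"], "amount": float(row["amount"])} for row in reader]


def aggregate(records):
    """Sum amounts per store across every source."""
    totals = defaultdict(float)
    for rec in records:
        totals[rec["store"]] += rec["amount"]
    return dict(totals)


# Normalise both sources to one record shape, then aggregate once.
combined = parse_stream(STREAM_EVENTS) + parse_batch(BATCH_CSV)
totals = aggregate(combined)
print(totals)
```

The point of the sketch is only that records from a JSON event feed and a CSV extract are normalised to one shape before a single aggregation step produces the figures a reporting tool would consume.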

Contact us if you would like to discuss how implementing Azure Databricks can facilitate a modern data and analytics architecture in your business.

Copyright © Tridant Pty Ltd.